Introduction to Anime Coloring and Machine Learning

Anime coloring, a crucial aspect of anime production, traditionally involves a meticulous process of hand-coloring cels or digital painting, often requiring significant time and artistic skill. Color choices, shading techniques, and the overall aesthetic contribute significantly to the final look and feel of the animation. This painstaking process, however, can be both time-consuming and expensive, limiting the potential for faster production cycles and wider accessibility to anime creation.

Machine learning offers an alternative that could substantially change this part of the anime industry. Applying machine learning to anime coloring has several potential advantages. Automated coloring can reduce production time and costs, allowing quicker turnaround and increased output. It can also apply colors consistently across a large number of frames, maintaining a uniform look that is difficult to achieve manually, especially in long-running series.

Machine learning algorithms can also assist artists by automating tedious tasks like line art cleanup and providing intelligent suggestions for color palettes and shading, thereby freeing up artists to focus on more creative aspects of the process. This collaborative approach enhances artistic productivity and allows for exploration of new stylistic choices.


Traditional Anime Coloring Techniques

Traditional anime coloring techniques varied depending on the era and production method. Early anime often utilized hand-painted cels, where individual transparent sheets were painted with specific colors and then layered over each other to create depth and complexity. This process was incredibly labor-intensive, requiring skilled artists and a significant amount of time. The advent of digital technology introduced digital painting software, enabling artists to color line art directly on a computer, improving efficiency and allowing for greater control over color and shading.

However, even with digital methods, coloring remains a time-consuming process requiring expertise in color theory and digital painting techniques. Applying style guidelines consistently across numerous frames and characters remains a challenge.

Benefits of Machine Learning in Anime Coloring

Machine learning algorithms, trained on large datasets of anime images, can learn to recognize and replicate the stylistic choices of human artists. This allows many parts of the coloring process to be automated, such as base color application, shading, and even the generation of different color variations. For example, a machine learning model could be trained on a specific anime style to color line art automatically and consistently, potentially cutting the time spent on manual coloring considerably.

These efficiency gains can translate into cost savings for studios. AI-assisted tools can also offer artists creative suggestions, such as color palettes and shading options they may not have considered, expanding artistic possibilities.
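Palette suggestions can be approximated even without a trained model: clustering the pixel colors of a reference image with k-means yields a handful of representative colors. The sketch below is a plain-Python illustration of that idea, assuming pixels are given as 8-bit RGB tuples; `extract_palette` is a hypothetical helper, not how any particular tool works.

```python
import random

def extract_palette(pixels, k=4, iterations=10, seed=0):
    """Cluster RGB pixels into k groups with plain k-means and return the cluster centres.

    Requires at least k distinct colors in `pixels` (random.sample raises ValueError otherwise).
    """
    rng = random.Random(seed)
    # Initialise the centres with k distinct pixel colors chosen at random.
    centres = rng.sample(sorted(set(pixels)), k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in pixels:
            # Assign each pixel to the nearest centre (squared Euclidean distance in RGB).
            nearest = min(range(k), key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centres[i])))
            clusters[nearest].append(p)
        # Move each centre to the mean of its cluster; keep it in place if the cluster is empty.
        centres = [
            tuple(round(sum(px[ch] for px in cluster) / len(cluster)) for ch in range(3))
            if cluster else centres[i]
            for i, cluster in enumerate(clusters)
        ]
    return sorted(centres)

# Skin tone, dark hair and a red accent, in proportions typical of a character sheet.
pixels = [(250, 220, 200)] * 50 + [(30, 30, 60)] * 30 + [(200, 40, 40)] * 20
print(extract_palette(pixels, k=3))
```

Real reference images contain anti-aliased edges and gradients, so the centres would then be averages rather than exact source colors.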

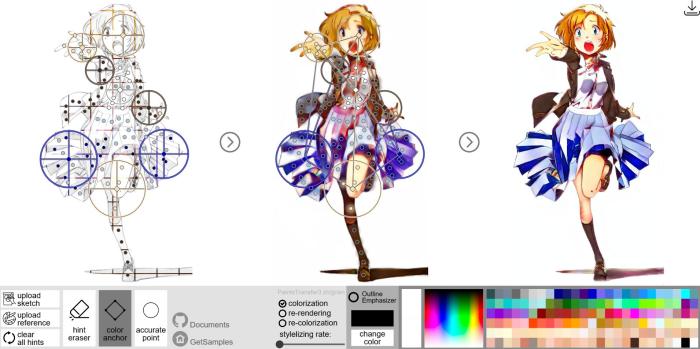

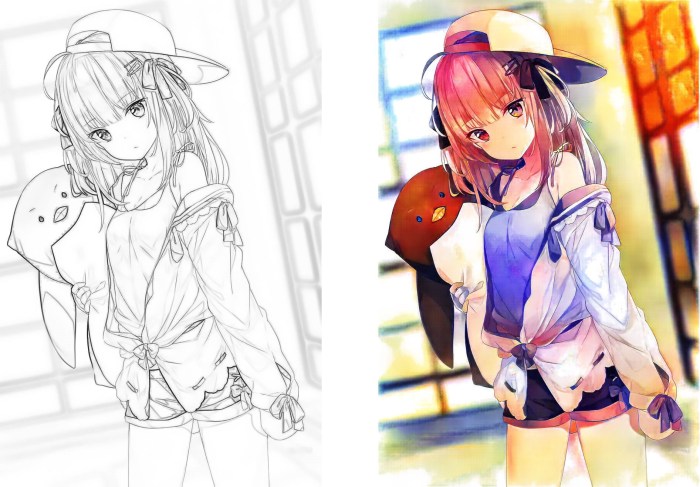

Current State of AI-Assisted Art Tools and Their Relevance to Anime

Currently, several AI-assisted art tools are emerging, offering varying degrees of automation in the coloring process. These tools often utilize techniques like Generative Adversarial Networks (GANs) and convolutional neural networks (CNNs) to learn the characteristics of different anime styles and apply them to new images. While these tools are not yet perfect and often require human oversight, they are rapidly improving and becoming increasingly sophisticated.
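As one concrete example of how a GAN can be set up for paired line-art-to-color training, the pix2pix formulation (Isola et al., 2017) combines a conditional adversarial loss with an L1 reconstruction term. Here $x$ is the line art, $y$ the colored target, $z$ a noise input, $G$ the generator, $D$ the discriminator, and $\lambda$ a weighting factor. This is shown as a representative formulation; individual tools may use different objectives.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right] \\
G^{*} &= \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
\end{aligned}
```

The adversarial term pushes the generator toward colorings the discriminator cannot tell apart from real ones, while the L1 term keeps the output close to the ground-truth coloring of the same line art.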

For example, some tools can automatically color line art based on a provided style reference, while others can suggest color palettes or generate variations of an existing image. For anime, the relevance is substantial: these tools can streamline the production pipeline and support the creative process, and may help studios produce higher-quality work faster and at lower cost.
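Generating variations of an existing image can be as simple as rotating the hue of its palette. The sketch below uses Python's standard `colorsys` module; `hue_variations` is a hypothetical helper, and real tools are more likely to use learned models than a fixed hue rotation.

```python
import colorsys

def hue_variations(palette, shifts=(0.0, 1 / 3, 2 / 3)):
    """Return one recolored palette per hue shift (shifts are fractions of a full turn)."""
    variations = []
    for shift in shifts:
        shifted = []
        for r, g, b in palette:
            # colorsys works on floats in [0, 1], so convert from 8-bit values first.
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Rotate the hue and wrap around; saturation and value stay unchanged.
            r2, g2, b2 = colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)
            shifted.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
        variations.append(shifted)
    return variations

print(hue_variations([(255, 0, 0)]))
```

Because saturation and value are preserved, grays and blacks (such as line art) are left untouched while colored regions shift together.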

The continued development and refinement of these AI-assisted tools is likely to play a significant role in shaping the future of anime production.

Data Requirements and Preprocessing

Training a robust anime coloring model requires a substantial and carefully curated dataset. The quality of the data directly affects the model’s ability to color anime-style images accurately and consistently. Preprocessing steps are crucial for data consistency and model performance, minimizing noise and making learning more efficient.

The quantity and type of data needed are critical considerations. A larger, more diverse dataset generally leads to better generalization, enabling the model to handle a wider range of anime styles and coloring techniques.

Preprocessing is vital to standardize the data, making it suitable for training. This includes steps like cleaning, resizing, and format conversion, ensuring all images are compatible with the chosen machine learning framework.

Data Types and Quantities

The ideal dataset consists of pairs of grayscale anime line art images and their corresponding colored counterparts. As a rough guideline, several thousand image pairs is a reasonable minimum, and significantly larger datasets (tens of thousands or more) will generally yield better results. The dataset should represent a variety of anime styles, character designs, backgrounds, and coloring palettes so that the model learns to generalize well across different scenarios.
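Building such pairs usually starts with matching files. The sketch below assumes a hypothetical naming convention in which a line art file and its colored version share a base name (for example `lineart/0001.png` and `color/0001.jpg`); `pair_by_stem` is an illustrative helper, not part of any library.

```python
from pathlib import PurePath

def pair_by_stem(lineart_files, color_files):
    """Match line art and colored files that share a base name (stem)."""
    # If several colored files share a stem, the last one listed wins.
    color_by_stem = {PurePath(name).stem: name for name in color_files}
    pairs, unmatched = [], []
    for name in sorted(lineart_files):
        stem = PurePath(name).stem
        if stem in color_by_stem:
            pairs.append((name, color_by_stem[stem]))
        else:
            # Line art without a colored counterpart cannot be used for paired training.
            unmatched.append(name)
    return pairs, unmatched

pairs, unmatched = pair_by_stem(
    ["lineart/0002.png", "lineart/0001.png", "lineart/0003.png"],
    ["color/0001.png", "color/0002.jpg"],
)
print(pairs, unmatched)
```

Reporting unmatched files rather than silently dropping them makes gaps in the dataset easy to spot.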

Consider incorporating images with different levels of detail and complexity to improve robustness. For example, a dataset could include images from various anime series, fan art, and manga. The data should be divided into training, validation, and test sets: the validation set is used to detect overfitting and tune the model during training, while the held-out test set gives a reliable final evaluation. A common split is 80% training, 10% validation, and 10% testing.
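The 80/10/10 split can be sketched as follows, assuming each dataset entry is a `(line_art, color)` tuple; `split_pairs` is a hypothetical helper. Shuffling whole tuples keeps every line art image with its colored counterpart.

```python
import random

def split_pairs(pairs, train=0.8, val=0.1, seed=42):
    """Shuffle (line_art, color) pairs and split them into train/validation/test lists."""
    shuffled = list(pairs)
    # A fixed seed makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # the remainder (about 10%) is held out for testing
    )

pairs = [(f"lineart/{i:04d}.png", f"color/{i:04d}.png") for i in range(1000)]
train_set, val_set, test_set = split_pairs(pairs)
print(len(train_set), len(val_set), len(test_set))
```

If the dataset contains many near-identical frames from the same scene, splitting by scene or series rather than by individual pair keeps near-duplicates out of the test set and gives a more honest evaluation.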

Image Preprocessing Steps

Several preprocessing steps are essential to prepare the data for model training. These steps help standardize the data, reduce noise, and improve model performance.

- Image Cleaning: This involves removing any artifacts or imperfections from the images, such as noise, blurriness, or inconsistencies in line art. This could involve using image filtering techniques to enhance the clarity and definition of the line art and color images.

- Resizing: All images should be resized (or cropped) to a consistent resolution, since most training pipelines stack images into fixed-size batches. A common approach is to choose a resolution that balances computational efficiency and image detail.

- Format Conversion: All images should be converted to a common image format, such as PNG or JPG, to ensure compatibility with the machine learning framework. PNG is often preferred because its lossless compression avoids the artifacts JPG can introduce around thin lines, and because it supports transparency.

- Data Normalization: Pixel values (0-255 for 8-bit images) should be scaled to a fixed range (e.g., 0-1, or -1 to 1 for generators with a tanh output) to improve training stability and efficiency. Keeping inputs on a small, consistent scale suits standard weight initialization and keeps gradient updates well behaved.
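Resizing and normalization can be sketched in plain Python, assuming grayscale images stored as lists of rows of 8-bit values; in practice a library such as Pillow or NumPy would do this work. `resize_nearest` and `normalize` are hypothetical helpers.

```python
def resize_nearest(image, width, height):
    """Nearest-neighbour resize of a grayscale image stored as a list of rows."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]

def normalize(image, low=0.0, high=1.0):
    """Map 8-bit values (0-255) linearly into [low, high], e.g. [0, 1] or [-1, 1]."""
    return [[low + (v / 255) * (high - low) for v in row] for row in image]

image = [[0, 10, 20, 30], [40, 50, 60, 70], [80, 90, 100, 110], [120, 130, 140, 150]]
small = resize_nearest(image, 2, 2)
print(small)                      # [[0, 20], [80, 100]]
print(normalize([[0, 255]]))      # [[0.0, 1.0]]
print(normalize([[0, 255]], -1))  # [[-1.0, 1.0]]
```

Nearest-neighbour sampling is used here for simplicity; it keeps line art crisp but can drop thin lines when an image is shrunk a lot, which is why area-averaging filters are often preferred for large reductions.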

Data Augmentation Strategies

Data augmentation techniques artificially increase the size and diversity of the training dataset. This helps improve model robustness and generalization by exposing the model to a wider range of variations in the input data.

- Random Cropping: Randomly cropping the same region from each line art image and its colored counterpart introduces variation in composition and framing.

- Random Flipping: Horizontally flipping images and their color counterparts creates mirrored versions of the original data.

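- Color Jittering: Introducing slight variations in brightness, contrast, saturation, and hue improves robustness to color variation. Because the line art input is grayscale, saturation and hue shifts affect only the colored targets, so they should be kept small to avoid drifting away from the intended palette.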

- Rotation: Rotating images by small random angles helps the model to learn to be invariant to minor changes in orientation.
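The key point with paired data is that geometric augmentations must be applied identically to both images. The sketch below assumes line art as rows of grayscale values and the colored target as rows of RGB tuples; `augment_pair` is a hypothetical helper that covers cropping, flipping and a brightness-only jitter.

```python
import random

def augment_pair(line_art, color, crop_size, rng, jitter=0.1):
    """Apply the same random crop and horizontal flip to a line art/color pair,
    then a small brightness jitter to the colored target only."""
    h, w = len(line_art), len(line_art[0])
    top = rng.randint(0, h - crop_size)
    left = rng.randint(0, w - crop_size)

    def crop(img):
        return [row[left:left + crop_size] for row in img[top:top + crop_size]]

    line_art, color = crop(line_art), crop(color)
    # Flip both images together so strokes and colors stay aligned.
    if rng.random() < 0.5:
        line_art = [row[::-1] for row in line_art]
        color = [row[::-1] for row in color]
    # Brightness jitter: scale every channel by one random factor, clamped to 255.
    factor = rng.uniform(1 - jitter, 1 + jitter)
    color = [[tuple(min(255, round(c * factor)) for c in px) for px in row] for row in color]
    return line_art, color

rng = random.Random(0)
line_art = [[y * 8 + x for x in range(8)] for y in range(8)]
color = [[(v * 4, v * 4, v * 4) for v in row] for row in line_art]
aug_line_art, aug_color = augment_pair(line_art, color, crop_size=4, rng=rng)
```

Rotation is left out because it needs interpolation; a library routine such as Pillow's `Image.rotate` would normally handle it, called with the same angle for both images.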

Example Datasets and Sources

Large, pre-labeled datasets built specifically for anime coloring are relatively scarce. Building a custom dataset often involves collecting images from various online sources, such as fan art websites, anime databases, and manga websites. Careful consideration must be given to copyright and usage rights when creating such a dataset. One strategy is to use publicly available line art and then create the corresponding color versions manually or by employing a simpler coloring model as a starting point.

Another approach is to use a combination of public domain images and those with permissive licenses, ensuring proper attribution. Danbooru is an example of a website containing a large number of anime images, though careful filtering and permission checking would be required.
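Filtering and permission checking can be partly automated when license information is available. The sketch below assumes hypothetical metadata records with `license` and `author` fields (real sources expose different fields, or none) and an example allowlist of SPDX license identifiers; it does not replace a proper legal review.

```python
# Example allowlist of SPDX identifiers; adjust to the outcome of your own legal review.
ALLOWED_LICENSES = {"CC0-1.0", "CC-BY-4.0"}

def filter_by_license(records, allowed=ALLOWED_LICENSES):
    """Keep records whose license is on the allowlist and that carry attribution info."""
    kept, rejected = [], []
    for record in records:
        has_license = record.get("license") in allowed
        # Permissive licenses such as CC BY still require crediting the author.
        has_attribution = bool(record.get("author"))
        (kept if has_license and has_attribution else rejected).append(record)
    return kept, rejected

records = [
    {"id": 1, "license": "CC-BY-4.0", "author": "artist_a"},
    {"id": 2, "license": "All rights reserved", "author": "artist_b"},
    {"id": 3, "license": "CC0-1.0", "author": ""},
]
kept, rejected = filter_by_license(records)
print([r["id"] for r in kept], [r["id"] for r in rejected])
```

Keeping the rejected records, rather than discarding them, leaves an audit trail of what was excluded and why.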

Future Directions and Applications

The field of anime coloring using machine learning is evolving rapidly, promising advances in both the quality and efficiency of anime production. Current methods lay a foundation for applications that go well beyond filling in base colors, opening doors to new tools for artists and animators. The potential for creative expansion and automation is considerable.

Future developments will likely see a convergence of techniques, leading to more nuanced and expressive results. This will allow for greater artistic control and efficiency in the animation workflow.

Advanced Style Transfer and Customization

Style transfer algorithms, already used in image editing, can be adapted to anime coloring to allow artists to easily apply the stylistic choices of different anime series or individual artists to their work. Imagine an artist wanting to recolor their artwork in the style of Studio Ghibli or a specific manga artist; this technology could make such stylistic changes seamless and intuitive.
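Neural style transfer in the form introduced by Gatys et al. represents an image's style by the Gram matrices of CNN feature maps and penalizes differences between them. The pure-Python sketch below shows only that loss term for a single layer; it assumes the feature maps have already been extracted by a CNN (not shown), and anime-specific tools may use quite different methods. `gram_matrix` and `style_loss` are hypothetical helper names.

```python
def gram_matrix(features):
    """features: list of C channels, each a flat list of M activations.
    Returns the C x C matrix of channel-to-channel inner products."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]

def style_loss(generated, style):
    """Single-layer style loss in the Gatys et al. form:
    sum of squared Gram differences divided by 4 * N^2 * M^2,
    where N is the number of channels and M the number of positions per channel."""
    n, m = len(generated), len(generated[0])
    g, a = gram_matrix(generated), gram_matrix(style)
    squared = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared / (4 * n ** 2 * m ** 2)

generated = [[1, 0], [0, 1]]  # 2 channels, 2 spatial positions each
style = [[1, 1], [0, 0]]
print(style_loss(generated, style))  # 0.03125
```

Because the Gram matrix sums over spatial positions, it captures which features occur together (textures, color combinations) rather than where they occur, which is what lets style be transferred onto different content.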

This would also allow for the creation of unique, hybrid styles by blending elements from various sources. The potential for experimentation and artistic exploration is vast.

Automatic Line Art Generation

While current methods focus on coloring existing line art, future research could explore the automatic generation of line art itself. This would involve training models to understand the characteristics of anime line art styles, enabling the creation of clean, consistent line work from rough sketches or even descriptions. This could significantly streamline the animation process, particularly for independent animators or smaller studios with limited resources.

Imagine a system that takes a basic sketch and automatically generates several variations of the line art, allowing the artist to select their preferred style.

Improved Accuracy and Efficiency

Several avenues of research can significantly improve the accuracy and efficiency of anime coloring algorithms.

- Enhanced Data Augmentation Techniques: Developing more sophisticated data augmentation methods can address the issue of limited training data, a common challenge in specialized domains like anime coloring. This might involve techniques that generate variations of existing anime images while preserving stylistic integrity.

- Improved Model Architectures: Exploring novel neural network architectures, such as transformers or generative adversarial networks (GANs) tailored to the specifics of anime coloring, could lead to more accurate and detailed colorizations. This could involve focusing on models that better understand the complex relationships between lines, shapes, and colors in anime artwork.

- Real-time Processing: Optimizing algorithms for real-time performance would enable their integration into live animation workflows, allowing artists to see the colorization results instantly. This would require significant advancements in computational efficiency and algorithm design.
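Whether a model is fast enough for live use can be checked against a per-frame time budget: at 24 frames per second, each frame gets 1/24 s, about 41.7 ms. The sketch below assumes a hypothetical `colorize` function that processes one frame; it reports the worst-case latency, since a single slow frame is enough to cause a visible stall.

```python
import time

def meets_frame_budget(colorize, frames, fps=24):
    """Time a colorization function on each frame and compare the slowest one with the budget."""
    budget = 1.0 / fps  # seconds available per frame (about 41.7 ms at 24 fps)
    worst = 0.0
    for frame in frames:
        start = time.perf_counter()
        colorize(frame)
        worst = max(worst, time.perf_counter() - start)
    return worst <= budget, worst, budget

# Placeholder "model" that returns the frame unchanged, standing in for a real colorizer.
ok, worst, budget = meets_frame_budget(lambda frame: frame, frames=range(10))
print(ok, f"worst {worst * 1000:.3f} ms of {budget * 1000:.1f} ms")
```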

Integration with Existing Animation Software

Future development should focus on seamless integration with existing animation software packages. This would allow animators to utilize these machine learning tools directly within their familiar workflows, avoiding the need for complex data transfer or separate software. Such integration would maximize the usability and accessibility of these powerful tools for a wider range of artists and studios.

FAQ Guide

What are the ethical considerations of using AI for anime coloring?

Ethical concerns include potential copyright infringement if AI models are trained on copyrighted artwork without permission and the impact on the livelihoods of human colorists. Careful consideration of data sourcing and responsible AI development is crucial.

How computationally intensive is training an anime coloring model?

Training a robust anime coloring model can be computationally expensive, requiring significant processing power and potentially specialized hardware like GPUs. The cost grows with the size and resolution of the training dataset and the sophistication of the model architecture.
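A back-of-the-envelope calculation shows why resolution matters so much: memory for image tensors grows with the square of the resolution. The sketch below assumes 32-bit floats (4 bytes per value), single-channel line art inputs and three-channel color targets; `tensor_bytes` is a hypothetical helper.

```python
def tensor_bytes(batch, height, width, channels, bytes_per_value=4):
    """Bytes needed to hold one batch of images stored as float32 (4 bytes per value)."""
    return batch * height * width * channels * bytes_per_value

# Line art (1 channel) plus colored target (3 channels) at 512 x 512, batch of 16.
inputs = tensor_bytes(16, 512, 512, 1)
targets = tensor_bytes(16, 512, 512, 3)
print((inputs + targets) / 2**20, "MiB")  # 64.0 MiB
```

This covers only the raw images; the intermediate activations stored for backpropagation usually need far more memory, and doubling the resolution to 1024 x 1024 quadruples every one of these figures.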

Can this technology be used for other art styles besides anime?

Yes, the underlying machine learning techniques can be adapted to other art styles, although the training data would need to be specific to the desired style. The principles of color palette generation and image manipulation are broadly applicable.